Retina Modelisation from a Camera

This section describes a simulation of the human retina from a standard camera (such as a smartphone camera). I assume you have already read the page The retina. The outputs of this simulation are two 2D matrices representing the pulse frequencies sent through the optic nerve to the brain. These two outputs correspond to the two pathways to the brain: parvo and magno.

To achieve this, we first convert the pixel image into cone outputs, respecting as closely as possible the distribution of the different cones across retinal eccentricity.

Then we directly simulate the firing frequencies of the ganglion cells from the cone outputs, based on their receptive fields.

Plan

- Retina Modelisation from a Camera

- Photo Receptors Simulation

- Bipolar cell modelisation ?

- Ganglionar cells modelisation (writing in progress)

- Notes

- Biblio

- Annex

Photo Receptors Simulation

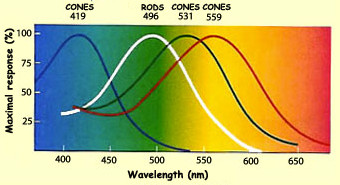

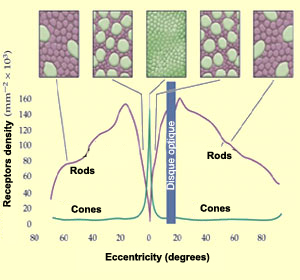

As you may remember, there are around 125 million photoreceptors in the retina: 120 million rods and 4 to 5 million cones. As rods are saturated during the day, I won’t consider them here and will only simulate cones.

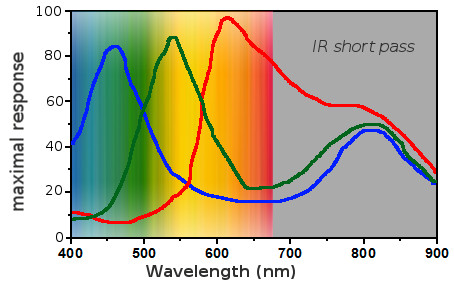

Several technologies can capture light, for example the CMOS (complementary metal-oxide semiconductor) sensor, which converts light into electrons. I won’t detail how it works here, but I want to show you how the pixels respond to light. The response looks a lot like that of cones, but the sensitivity peaks are shifted.

A typical 4K phone camera has a resolution of 8.29 million RGB pixels (3840 x 2160 pixels, with 3 color channels each). Simulating 5 million cones with 8.29 million pixels seems sufficient; however, it is not. The main issue is the distribution of the cones across the retina!

Pixel density

The pixel density per mm² is constant in a camera: pixels form a grid with constant spacing. This density cannot be compared directly with the cone density because the focal length of the eye differs from that of the camera. One way around this issue is to work with densities per degree² of visual field.

To get the pixel density, we first need the focal length of the camera in pixel units.

Estimate camera focal length and field of view

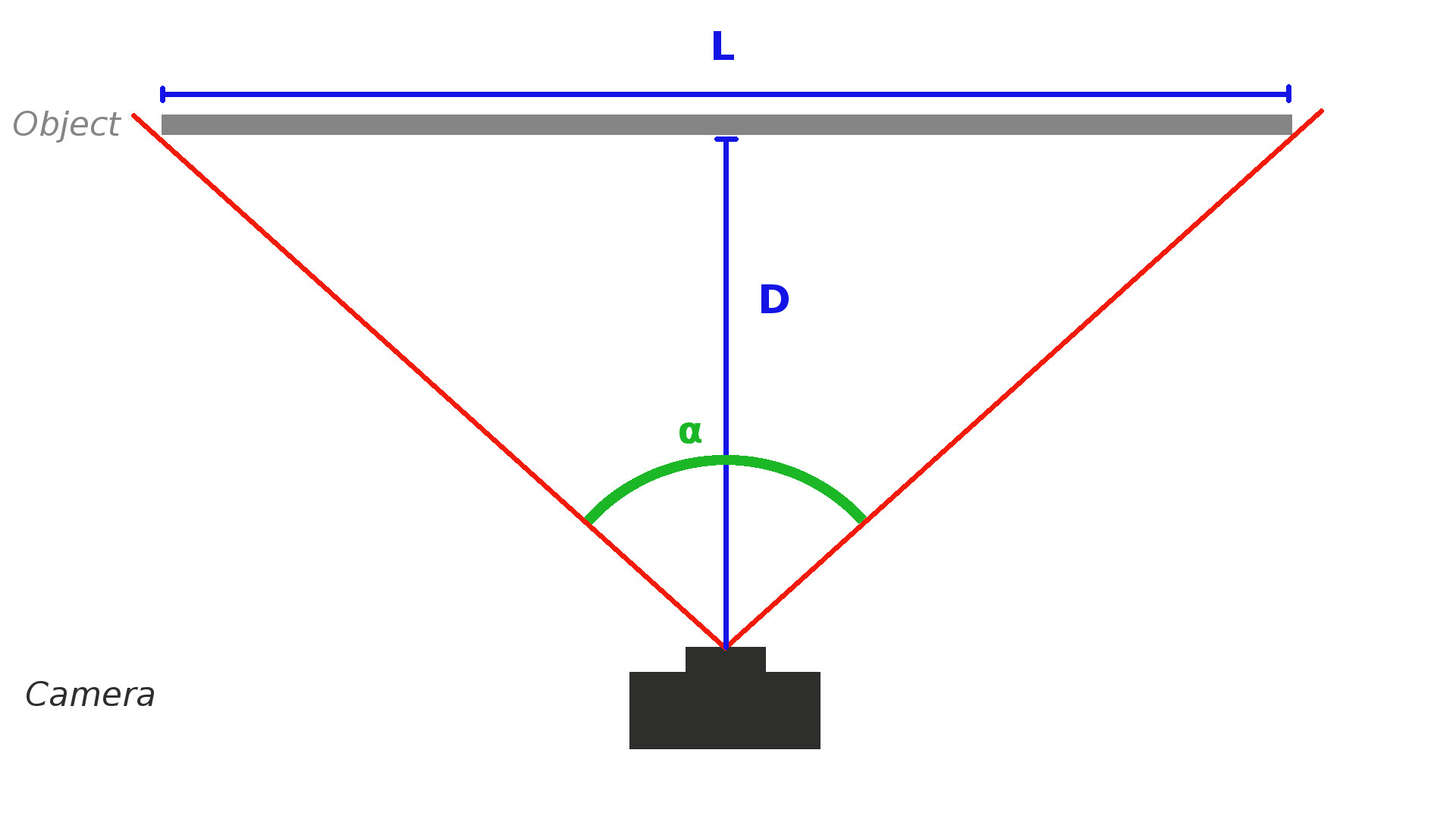

If, like me, you cannot find the focal length or the field of view of your camera, you can estimate them with the procedure below.

First, choose an object and position your camera so that the object exactly fills the width of the camera view. Note the object width $L$ and the distance $D$ from the camera to the object.

Then, using the simple trigonometric relations $(1)$ and $(2)$, you can determine the field of view $\alpha$:

\begin{equation}\tan(\frac{\alpha}{2}) = \frac{L}{2D}\end{equation} with:

- $\alpha$ being the field of view

- $L$ Object size

- $D$ Distance to Object

From $(1)$ we can deduce:

\begin{equation}\alpha = 2\times\arctan(\frac{L}{2D})\end{equation}

With my phone camera, I measured $L = 480$ mm and $D = 320$ mm. This gives a horizontal field of view of about 74°.
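As a quick check of equation $(2)$, here is a minimal Python sketch applied to the measurements above (the function name `field_of_view_deg` is illustrative, not from the original):

```python
import math


def field_of_view_deg(object_width: float, distance: float) -> float:
    """Equation (2): alpha = 2 * arctan(L / (2 * D)), returned in degrees.

    object_width (L) and distance (D) must be in the same unit, e.g. mm.
    """
    return math.degrees(2.0 * math.atan(object_width / (2.0 * distance)))


# Measurements from the text: L = 480 mm, D = 320 mm
fov = field_of_view_deg(480.0, 320.0)
print(f"horizontal field of view: {fov:.2f} deg")  # 73.74 deg
```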

To get the focal length in pixel units, use $(1)$ again, with $L$ being the image width in pixels and $D$ the focal length in pixels:

$f_{pix}=\frac{pixelWidth}{2\times\tan(\frac{\alpha_{fov}}{2})}$

with:

- $f_{pix}$: focal length in pixels

- $pixelWidth$: image width in pixels (3840 for the 4K camera)

- $\alpha_{fov}$: field of view measured in the previous step

On the 4K camera I found $f_{pix}=2548$ pixels.
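A minimal sketch of the focal length formula, assuming the 3840-pixel image width of the 4K camera and the field of view rounded to 74° (the helper name is illustrative):

```python
import math


def focal_length_pixels(pixel_width: int, fov_deg: float) -> float:
    """f_pix = pixelWidth / (2 * tan(alpha_fov / 2)), with alpha_fov in degrees."""
    return pixel_width / (2.0 * math.tan(math.radians(fov_deg) / 2.0))


f_pix = focal_length_pixels(3840, 74.0)  # 4K width, field of view rounded to 74 deg
print(round(f_pix))  # 2548
```

Using the unrounded 73.74° instead gives exactly 2560 pixels, since $\tan(\alpha_{fov}/2) = 480/640 = 0.75$; $f_{pix}$ is therefore sensitive to how the field of view is rounded.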

Determine pixel density in $deg^{-2}$

With the focal length in pixel units, we can estimate the number of pixels covering 1° of visual angle at any eccentricity:

\begin{equation}g(ecc)=f_{pix}\times(\tan(ecc+0.5)-\tan(ecc-0.5))\end{equation}

with

- $ecc$ eccentricity in degrees

- $f_{pix}$ focal length in pixel units

To estimate the pixel density, we simply square the function above.

\begin{equation}d_{pix}(ecc)=g(ecc)^{2}\end{equation}

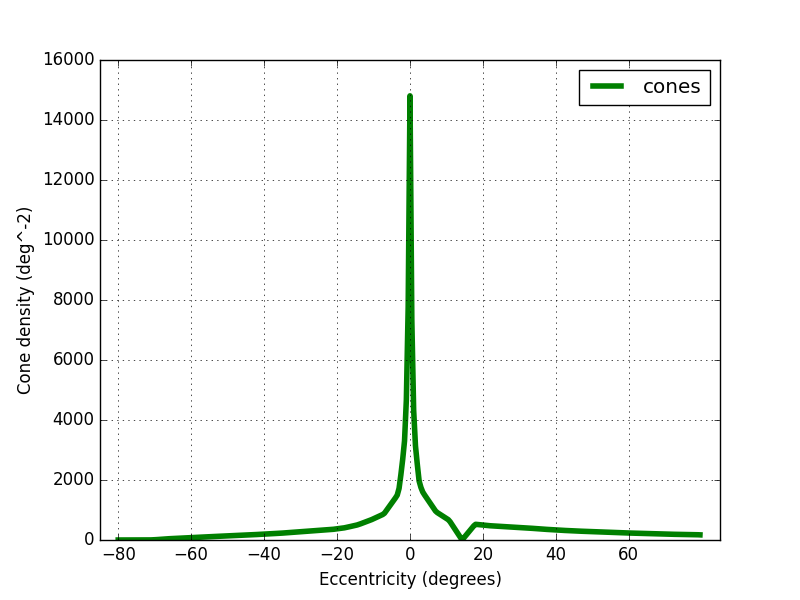
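The two functions above can be sketched in Python as follows, assuming $f_{pix}=2548$ and eccentricities in degrees (function names are illustrative):

```python
import math

F_PIX = 2548.0  # focal length in pixels found for the 4K camera


def pixels_per_degree(ecc_deg: float, f_pix: float = F_PIX) -> float:
    """g(ecc): pixels covering the 1-degree interval [ecc - 0.5, ecc + 0.5]."""
    upper = math.tan(math.radians(ecc_deg + 0.5))
    lower = math.tan(math.radians(ecc_deg - 0.5))
    return f_pix * (upper - lower)


def pixel_density_deg2(ecc_deg: float, f_pix: float = F_PIX) -> float:
    """d_pix(ecc) = g(ecc)^2, in pixels per deg^2."""
    return pixels_per_degree(ecc_deg, f_pix) ** 2


for ecc in (0, 10, 20, 30):
    print(ecc, round(pixels_per_degree(ecc), 1), round(pixel_density_deg2(ecc)))
```

Because of the rectilinear projection, $g$ grows with eccentricity (about 44.5 pixels per degree at the center), which is the opposite of the cone density trend.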

Convert cone density to $deg^{-2}$

To compare retinal and camera densities, I converted all retinal densities from per mm² to per degree². To do this, I used the polynomial approximation by Andrew B. Watson [10], based on the work of Drasdo and Fowler [11].

$a(r_{deg}) = 0.0752 + 5.846\times10^{-5}r_{deg} - 1.064\times10^{-5}r_{deg}^2 +4.116\times10^{-8}r_{deg}^3$

with

- $a$: ratio of areas, in mm²/deg²

- $r_{deg}$: eccentricity in degrees

By multiplying the cone density (in cones/mm²) by this factor, I obtained figure 6 below. The peak density is around 15,000 cones/deg² and drops to about 400 cones/deg² at 20°.

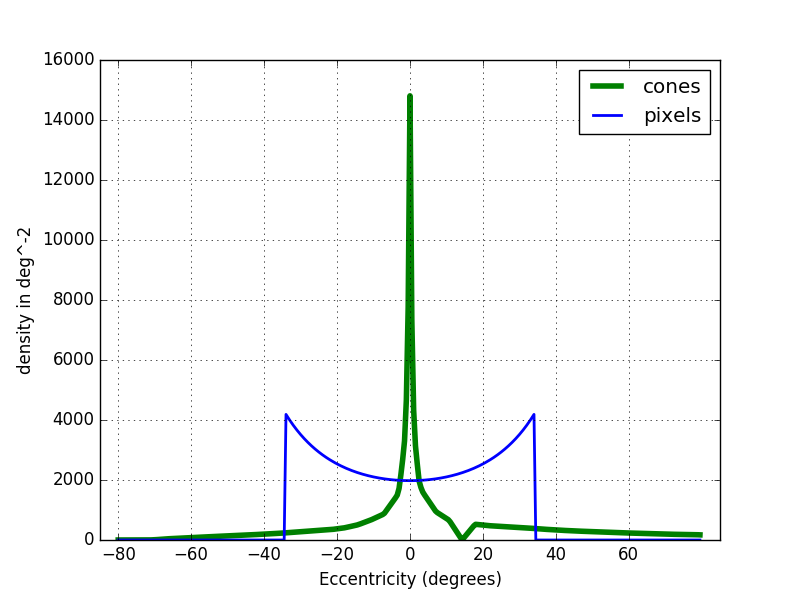
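A minimal sketch of this conversion. The 200,000 cones/mm² input is a hypothetical round number chosen for illustration, not a value from the data used in the text:

```python
def mm2_per_deg2(r_deg):
    """a(r): retinal area in mm^2 per deg^2 at eccentricity r_deg (Watson's polynomial)."""
    return (0.0752
            + 5.846e-5 * r_deg
            - 1.064e-5 * r_deg ** 2
            + 4.116e-8 * r_deg ** 3)


def density_per_deg2(density_per_mm2, r_deg):
    """Convert a density in cells/mm^2 into cells/deg^2."""
    return density_per_mm2 * mm2_per_deg2(r_deg)


# Hypothetical input: 200,000 cones/mm^2 at the foveal center (illustrative only)
print(round(density_per_deg2(200_000, 0.0)))  # 15040
```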

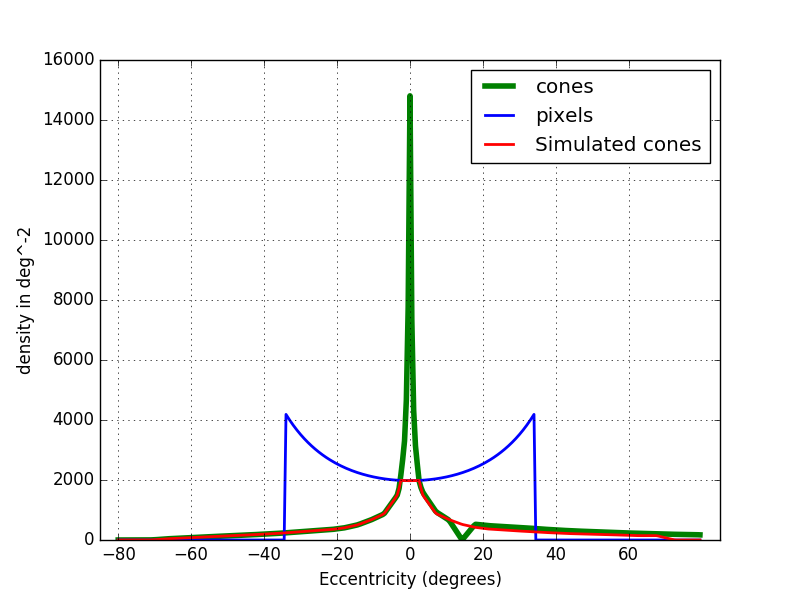

Pixel vs Cone density

The figure below compares the cone density of the eye with the pixel density of the camera. In the foveal area (5°), the 4K camera clearly does not have enough pixels, whereas the peripheral area can easily be simulated.

In the fovea there are two choices: either reuse the same pixel for several cones, or drop some cones to match the camera density (red curve in the figure below).

Pixels by cone

To find the number of pixels per simulated cone, we divide the camera pixel density by the simulated cone density (the cone density with its foveal peak flattened). It varies from 1 pixel per cone in the fovea to 22 pixels per cone.

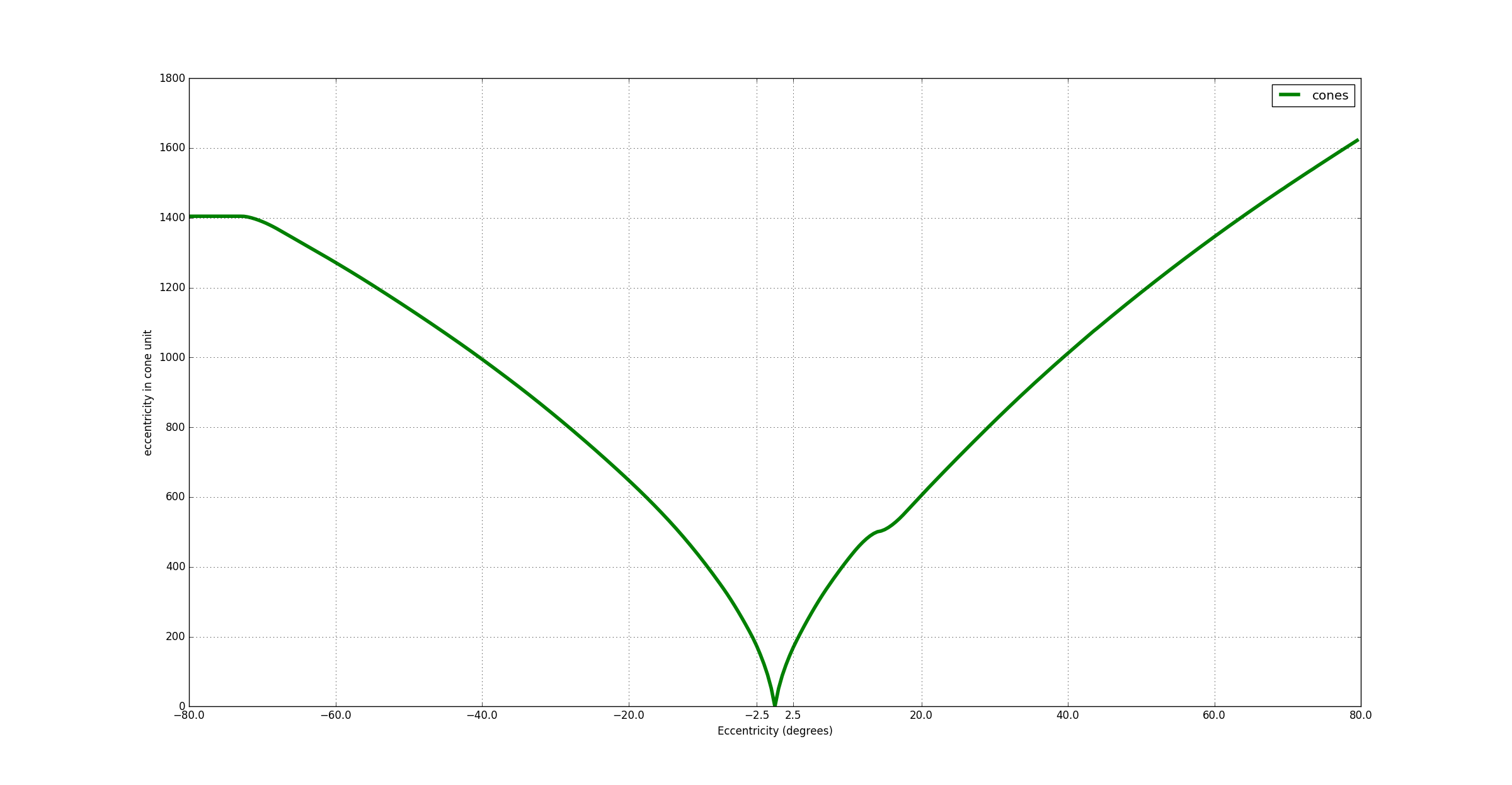
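A minimal sketch of this computation, assuming the "drop some cones" option, i.e. the simulated cone density is capped at the camera pixel density. The density values in the example are made up for illustration:

```python
def simulated_cone_density(cone_density, pixel_density):
    """Flattened fovea: never simulate more cones than there are pixels."""
    return min(cone_density, pixel_density)


def pixels_per_cone(cone_density, pixel_density):
    """Pixels available per simulated cone (always >= 1 with the cap above)."""
    return pixel_density / simulated_cone_density(cone_density, pixel_density)


# Hypothetical densities in deg^-2, for illustration only
print(pixels_per_cone(15_000, 1_978))  # 1.0 (fovea: some cones dropped)
print(pixels_per_cone(400, 2_500))     # 6.25 (periphery)
```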

Cone density integral

To know the number of cones from the center of the fovea to any eccentricity, we can integrate the cone density curve (see figure below). I used the values derived from Curcio's work [12] provided by [10], keeping only the temporal and nasal cone densities. I noticed that the foveal diameter spans only 340 cones instead of the 500 cones reported by many papers. This difference may come from the data I used, which may not represent the average cone distribution (or from a mistake in my calculations ^^)…

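$I_{cone}(ecc)=\int_{0}^{ecc}\sqrt{D_{cone}(x)}\,dx$

with:

- $D_{cone}(x)$: cone density in $deg^{-2}$ at eccentricity $x$; its square root is the linear density in cones per degree, so the integral counts cones along a radius

- $ecc$: eccentricity in $deg$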

The curve flattens around 16° because of the optic nerve head (blind spot), which contains no cones.

Pixel density integral

The pixel density can also be integrated (figure below). Around 36°, the curve flattens because the edge of the sensor array is reached (3840/2 = 1920 pixels for the 4K camera).

$I_{pixel}(ecc)=\int_{0}^{ecc}\sqrt{D_{pixel}(x)}\,dx$

with:

- $D_{pixel}(x)$: pixel density in $deg^{-2}$ at eccentricity $x$ (so $\sqrt{D_{pixel}(x)}=g(x)$, the number of pixels per degree)

- $ecc$: eccentricity in $deg$
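To make the pixel integral concrete, here is a sketch that integrates $g(ecc)$, the pixels per degree, with the trapezoidal rule. It assumes the integral counts pixels along a radius (the linear density, i.e. the square root of $d_{pix}$), which is what makes the count reach 3840/2 at the sensor edge. It also compares the result with the closed form $f_{pix}\tan(ecc)$ (names are illustrative):

```python
import math

F_PIX = 2548.0          # focal length in pixels (4K camera)
HALF_WIDTH = 3840 / 2   # pixels from the image center to the sensor edge


def pixels_per_degree(ecc_deg):
    """g(ecc) = sqrt(d_pix(ecc)): pixels per degree at eccentricity ecc_deg."""
    return F_PIX * (math.tan(math.radians(ecc_deg + 0.5))
                    - math.tan(math.radians(ecc_deg - 0.5)))


def cumulative_pixels(ecc_deg, step=0.01):
    """I_pixel(ecc): trapezoidal integral of g from 0 to ecc_deg."""
    n = max(1, int(round(ecc_deg / step)))
    h = ecc_deg / n
    total = 0.5 * (pixels_per_degree(0.0) + pixels_per_degree(ecc_deg))
    total += sum(pixels_per_degree(i * h) for i in range(1, n))
    return total * h


for ecc in (10.0, 30.0):
    closed_form = F_PIX * math.tan(math.radians(ecc))
    print(ecc, round(cumulative_pixels(ecc), 1), round(closed_form, 1))

# Eccentricity at which the radial pixel count reaches the sensor edge
edge_ecc = math.degrees(math.atan(HALF_WIDTH / F_PIX))
print(round(edge_ecc, 1))  # 37.0
```

The closed form holds because $g(ecc)$ is approximately the derivative of $f_{pix}\tan(ecc)$ over a 1° step. With $f_{pix}=2548$, the sensor edge (1920 pixels) is therefore reached at about 37°, close to where the curve flattens.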

Link between cone position and pixel position

To convert a cone's radial position (its index counted from the center of the fovea) into a radial pixel position, we use the function $P()$ below.

$P(coneRadialIndex)=I_{pixel}(I_{cone}^{-1}(coneRadialIndex))$
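The mapping $P$ can be sketched by tabulating both cumulative counts and inverting $I_{cone}$ by linear interpolation. This is an illustrative sketch under stated assumptions: the cone density function below is a HYPOTHETICAL placeholder (not the Curcio values used in the text), each count is the integral of the square root of the corresponding density, and all names are made up for the example:

```python
import bisect
import math

F_PIX = 2548.0   # focal length in pixels (4K camera)
MAX_ECC = 36.0   # deg, roughly where the sensor edge is reached
STEP = 0.01      # deg, integration step


def cone_density_deg2(ecc):
    """HYPOTHETICAL placeholder cone density (cones/deg^2), NOT Curcio's data."""
    return 14_804.0 / (1.0 + ecc / 0.5) ** 2 + 400.0


def pixel_density_deg2(ecc):
    """d_pix(ecc) = g(ecc)^2, in pixels/deg^2."""
    g = F_PIX * (math.tan(math.radians(ecc + 0.5)) - math.tan(math.radians(ecc - 0.5)))
    return g * g


def cumulative(density):
    """Running trapezoidal integral of sqrt(density): cells counted along a radius."""
    eccs, counts = [0.0], [0.0]
    prev = math.sqrt(density(0.0))
    for i in range(1, int(round(MAX_ECC / STEP)) + 1):
        ecc = i * STEP
        cur = math.sqrt(density(ecc))
        counts.append(counts[-1] + 0.5 * (prev + cur) * STEP)
        eccs.append(ecc)
        prev = cur
    return eccs, counts


def interp(xs, ys, x):
    """Piecewise-linear interpolation of ys at x (xs increasing, clamped at the ends)."""
    i = bisect.bisect_left(xs, x)
    if i == 0:
        return ys[0]
    if i == len(xs):
        return ys[-1]
    t = (x - xs[i - 1]) / (xs[i] - xs[i - 1])
    return ys[i - 1] + t * (ys[i] - ys[i - 1])


ECCS, I_CONE = cumulative(cone_density_deg2)
_, I_PIXEL = cumulative(pixel_density_deg2)


def cone_to_pixel_radius(cone_radial_index):
    """P(i) = I_pixel(I_cone^-1(i)): radial pixel position of the i-th cone."""
    ecc = interp(I_CONE, ECCS, cone_radial_index)  # invert I_cone
    return interp(ECCS, I_PIXEL, ecc)


for idx in (0, 50, 200, 500):
    print(idx, round(cone_to_pixel_radius(idx), 1))
```

Near the center, the placeholder cone linear density (about 123 cones per degree) exceeds the pixel linear density (about 44 pixels per degree), so consecutive cone indices fall inside the same pixel. With real data, $I_{cone}$ is flat over the optic disc; the interpolation then returns the first eccentricity reaching that count.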

Cone distribution simulation

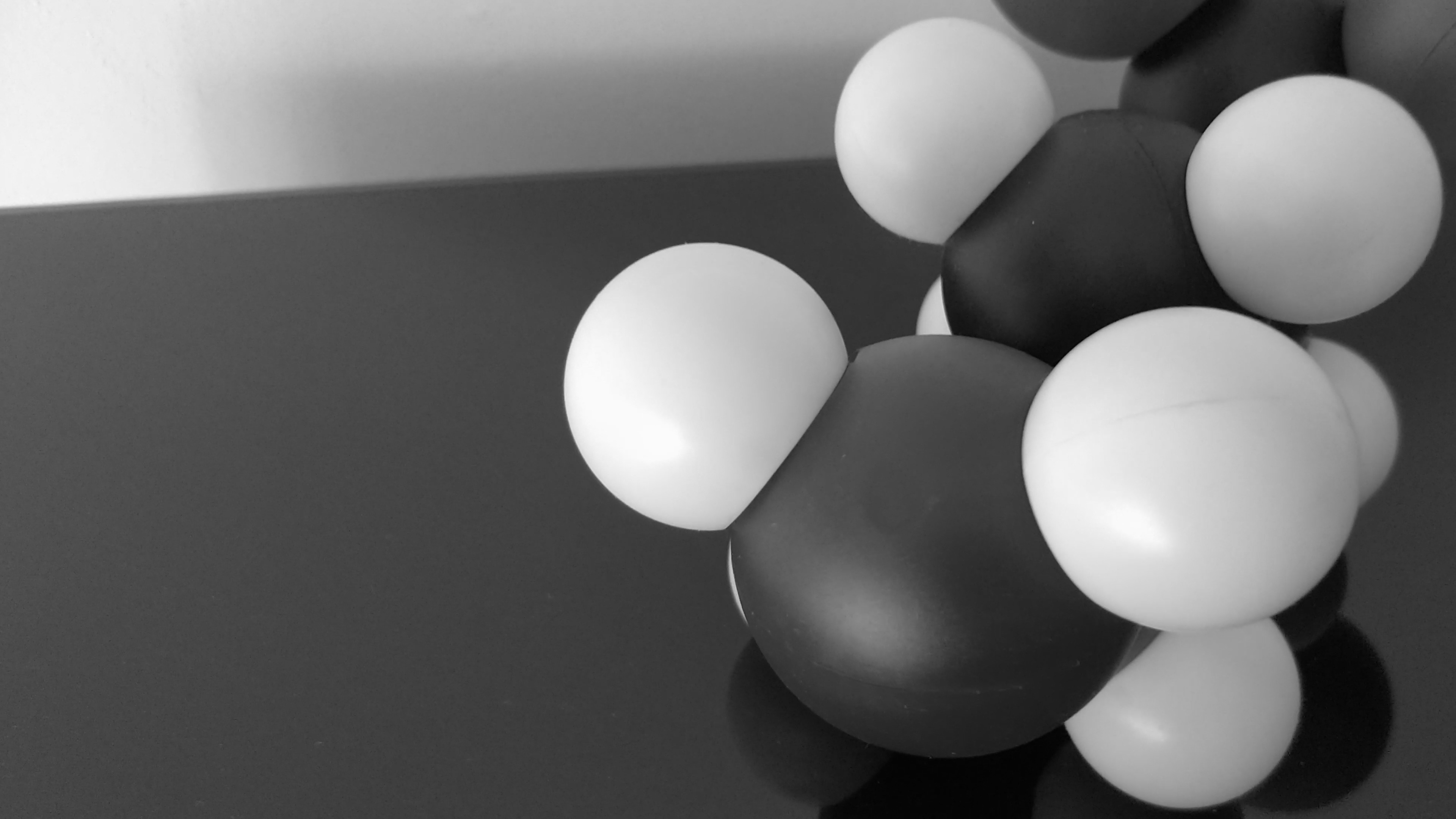

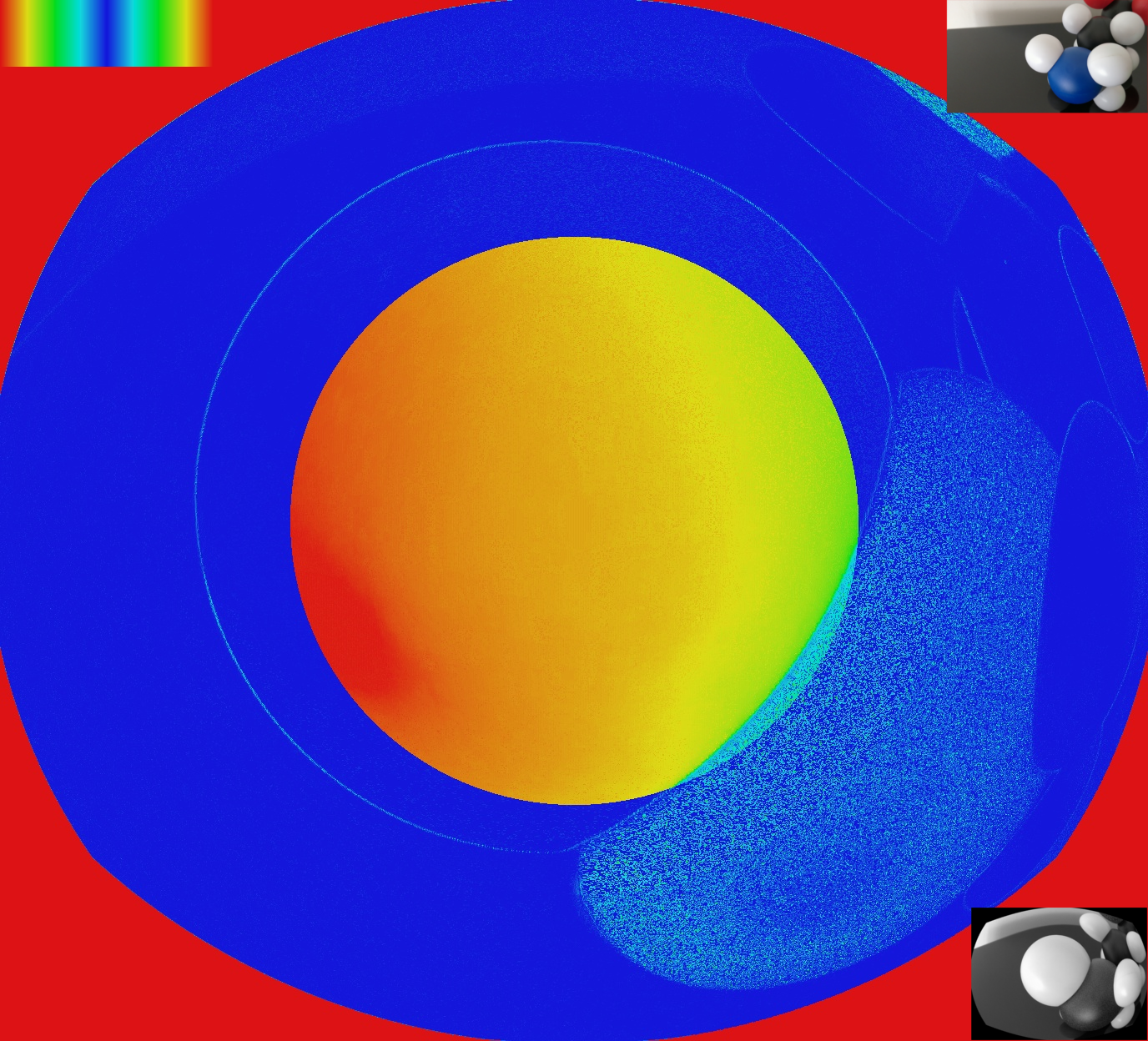

From the image above, the following cone simulation has been made:

Cones types simulation (S, L, M)

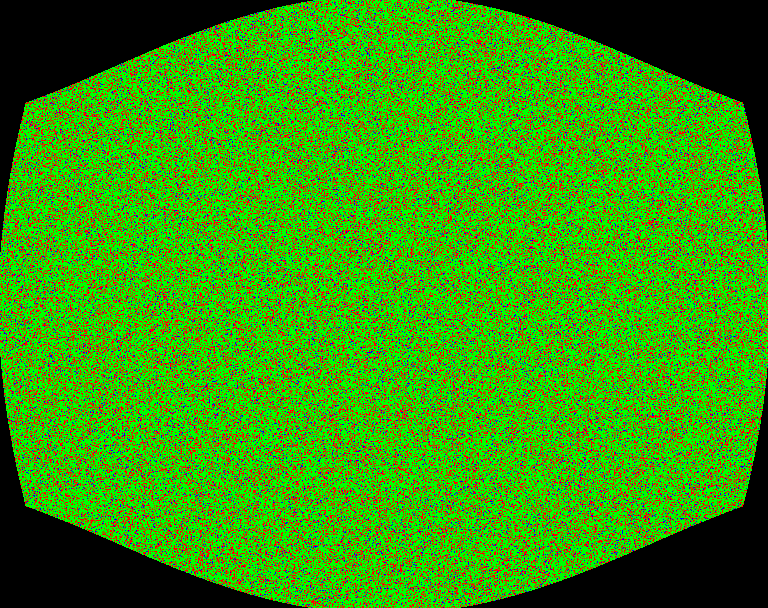

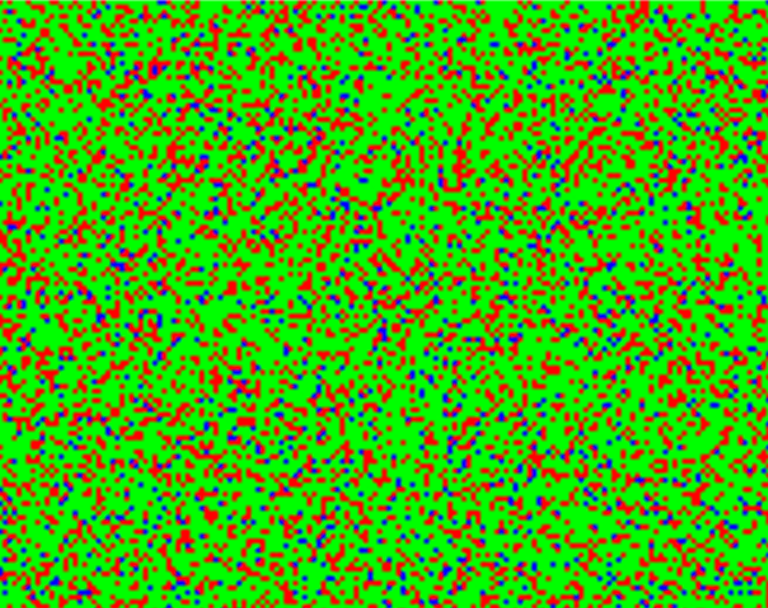

In the previous section, only the cone distribution was considered, not the cone types: blue, green and red (S, M, L). The proportions of cone types vary a lot between individuals, except for blue cones, which represent only about 5% of them. For the simulation I chose 70% M-type, 25% L-type and 5% S-type cones.

For this simulation, cone types are assigned randomly with these proportions (70% M, 25% L, 5% S). There is a debate on whether the arrangement of cone types is actually random, but here I assume it is.

Each simulated cone uses only one color channel of its pixel: red for L-type cones, green for M-type cones and blue for S-type cones. You can see a representation below:

The real cone response is a change in membrane potential that decays rapidly. A pixel, in contrast, gives a value between 0 and 255 that stays stable under constant illumination. I will handle these differences by adapting the processing of the upper layers (in the next sections).

I also made a video of the cone simulation, in which the camera passes over green, blue and red objects.
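A minimal sketch of the random type assignment and of the one-channel-per-cone rule, assuming Python's `random` module with a fixed seed for reproducibility (the names are illustrative):

```python
import random

# Proportions chosen in the text: 70% M, 25% L, 5% S
CONE_TYPES = ("M", "L", "S")
CONE_WEIGHTS = (0.70, 0.25, 0.05)
# Index of the pixel channel read by each cone type in an (R, G, B) tuple
CHANNEL = {"L": 0, "M": 1, "S": 2}  # L -> red, M -> green, S -> blue


def assign_cone_types(n_cones, seed=0):
    """Draw a type independently for each simulated cone."""
    rng = random.Random(seed)
    return rng.choices(CONE_TYPES, weights=CONE_WEIGHTS, k=n_cones)


def cone_response(cone_type, rgb_pixel):
    """A cone reads a single channel (0-255) of its assigned pixel."""
    return rgb_pixel[CHANNEL[cone_type]]


types = assign_cone_types(100_000)
print({t: types.count(t) / len(types) for t in CONE_TYPES})
print(cone_response("L", (200, 120, 30)))  # 200
```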

Pixel limitations

In typical cameras, pixels are triggered either all at once (global shutter) or line by line (rolling shutter). In the eye, each photoreceptor can be triggered at any time.

Cameras integrate light over time, whereas cones respond to local changes in luminosity. If you hold your camera still, pointed at one particular area, the pixel responses will not change until the scene changes. In the same situation, the cone response rises and then decays over time until the change in potential returns to zero. Thanks to this behaviour, cones react to local changes in intensity. Camera manufacturers try to mimic this ‘local luminosity’ adaptation with HDR (High Dynamic Range) technology, which takes several pictures with different exposures and combines them afterwards to avoid dark and saturated areas. Note: event cameras may be more biomimetic, but they are quite expensive and have a low resolution.

For the simulation, I have no choice but to ignore these differences.

Bipolar cell modelisation ?

I simulate the ganglion cell responses directly from the cone inputs and reproduce the ON/OFF-center behaviour at the ganglion cell level. Horizontal (H) cells are skipped in the same way.

Ganglionar cells modelisation

Ganglion cells (GCs) are the last cells of the retina: their outputs are what the brain receives.

Most GCs share a similar center-surround organization (ON-center/OFF-surround or OFF-center/ON-surround). The main differences come from their receptive fields (I don’t consider response time here, although it can also differ).

To compute an ON-center ganglion cell response, we take the mean cone response in the central receptive field and subtract the mean cone response of the peripheral receptive field.

As the simulated cone response is between 0 and 255, this difference is between -255 and 255. To bring it back to the 0 to 255 range, we halve it and center it on 128. A value of 0 means that the cell fires at 0 Hz, and 255 represents a 100 Hz firing rate.

\begin{equation}\frac{1}{2}\times(\frac{\sum_{x=1}^{n_i} c_i(x)}{n_i}-\frac{\sum_{x=1}^{n_p} c_p(x)}{n_p})+128\end{equation}

with:

- $n_i,n_p$: total number of inner (or peripheral) cones

- $c_i(x)$: function returning the response from the xth inner cone

- $c_p(x)$: function returning the response from the xth peripheral cone

For an OFF-center cell, it becomes:

\begin{equation}\frac{1}{2}\times(\frac{\sum_{x=1}^{n_p} c_p(x)}{n_p}-\frac{\sum_{x=1}^{n_i} c_i(x)}{n_i}) + 128\end{equation}

I use a ratio of $\frac{1}{3}$ between the center radius and the outer (surround) radius of the receptive field.
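The ON- and OFF-center formulas, with the 1/3 center/surround radius ratio, can be sketched as follows. This is an illustrative sketch: cones are given as hypothetical `(distance_from_center, response)` pairs, and the final clamp to [0, 255] is an assumption added here because $0.5 \times 255 + 128$ slightly exceeds 255:

```python
def _mean(values):
    """Arithmetic mean; the formula is undefined for an empty region."""
    if not values:
        raise ValueError("empty receptive field region")
    return sum(values) / len(values)


def _clamp(value, low=0.0, high=255.0):
    # Assumption: clamp, since 0.5 * 255 + 128 = 255.5 slightly overshoots
    return min(high, max(low, value))


def on_center_response(center, surround):
    """0.5 * (mean(center) - mean(surround)) + 128; 0 -> 0 Hz, 255 -> 100 Hz."""
    return _clamp(0.5 * (_mean(center) - _mean(surround)) + 128.0)


def off_center_response(center, surround):
    """0.5 * (mean(surround) - mean(center)) + 128."""
    return _clamp(0.5 * (_mean(surround) - _mean(center)) + 128.0)


def split_receptive_field(cones, radius, ratio=1.0 / 3.0):
    """Split (distance, response) pairs into center and surround responses.

    The center radius is ratio * radius; cones beyond radius are ignored.
    """
    r_center = ratio * radius
    center = [v for d, v in cones if d <= r_center]
    surround = [v for d, v in cones if r_center < d <= radius]
    return center, surround


cones = [(0.0, 200), (0.5, 200), (2.0, 100), (2.5, 100)]
center, surround = split_receptive_field(cones, radius=3.0)
print(on_center_response(center, surround))   # 178.0
print(off_center_response(center, surround))  # 78.0
```

Without the clamp, the ON and OFF values for the same receptive field always sum to 256, which shows why identical ON and OFF fields carry no extra information. The sketch raises an error when the surround is empty, as for single-cone foveal midget cells; the text does not say how that case is handled.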

To apply this function to each of the three main ganglion cell types (midget, parasol and konio), I need to determine the size of their receptive fields in cones.

Midget cells

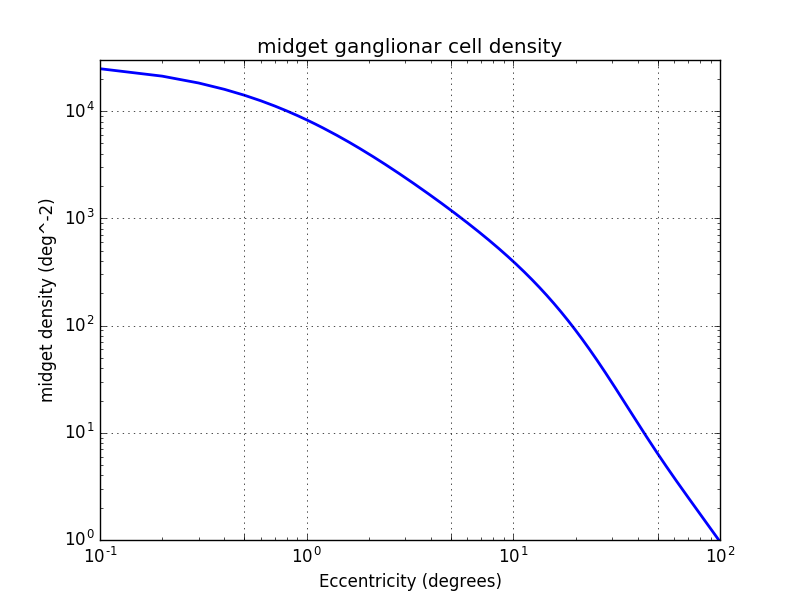

Midget cells distribution

ON-center midget cells cover the whole retina without overlapping, and so do OFF-center midget cells [2]. In the fovea there are 2 ganglion cells per cone, one ON and one OFF.

To get the cone/midget ratio outside the fovea, I used Andrew B. Watson's formula for midget retinal ganglion cell density [10]. This function estimates the midget GC density at the location of their receptive fields. This is not straightforward, because midget cells are displaced from their receptive fields by a variable distance.

$density_{midgetf}(r_{deg},k)=2d_c(0)(1+\frac{r_{deg}}{r_m})^{-1}\times[a_k(1+\frac{r_{deg}}{r_{2,k}})^{-2}+(1-a_k)\exp(-\frac{r_{deg}}{r_{e,k}})]$

with

- $d_c$: cone density per degree²; the foveal peak $d_c(0)$ is 14,804 cones/deg²

- $r_{2,k}$: eccentricity at which the density is reduced by a factor of 4

- $a_k$: weighting of the first term

- $r_{e,k}$: scale factor of the exponential

- $k$: the meridian

- $r_m = 41.03°$

For the simulation I used only the nasal meridian values given by [10].

- $a=0.9729$

- $r_2=1.084$

- $r_e=7.633$
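A minimal sketch of the midget density formula with the nasal meridian parameters above ($d_c(0)=14\,804$ cones/deg², $r_m=41.03°$); the function name is illustrative:

```python
import math

D_C0 = 14_804.0  # peak foveal cone density d_c(0), cones/deg^2
R_M = 41.03      # r_m, in degrees
# Nasal meridian parameters used in the text: a_k, r_{2,k}, r_{e,k}
A_K, R2_K, RE_K = 0.9729, 1.084, 7.633


def midget_density(r_deg, a=A_K, r2=R2_K, re=RE_K):
    """Midget RGC density (cells/deg^2) at receptive-field eccentricity r_deg."""
    eccentricity_factor = 1.0 / (1.0 + r_deg / R_M)
    shape = a * (1.0 + r_deg / r2) ** -2 + (1.0 - a) * math.exp(-r_deg / re)
    return 2.0 * D_C0 * eccentricity_factor * shape


for r in (0.0, 5.0, 30.0):
    print(r, round(midget_density(r), 1))
```

At the center the bracket equals 1, so the density is exactly $2d_c(0)=29\,608$ cells/deg², i.e. 2 midget cells per cone.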

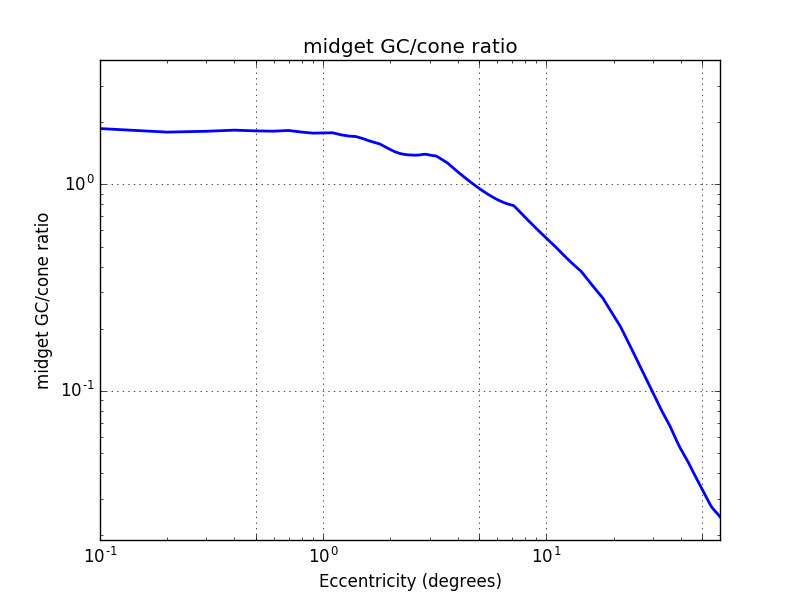

To get the midget GC/cone ratio, we divide the curve above by the cone density. There are 2 midget ganglion cells per cone in the fovea, and the ratio then decreases rapidly. At 30° there are 100 cones per midget cell.

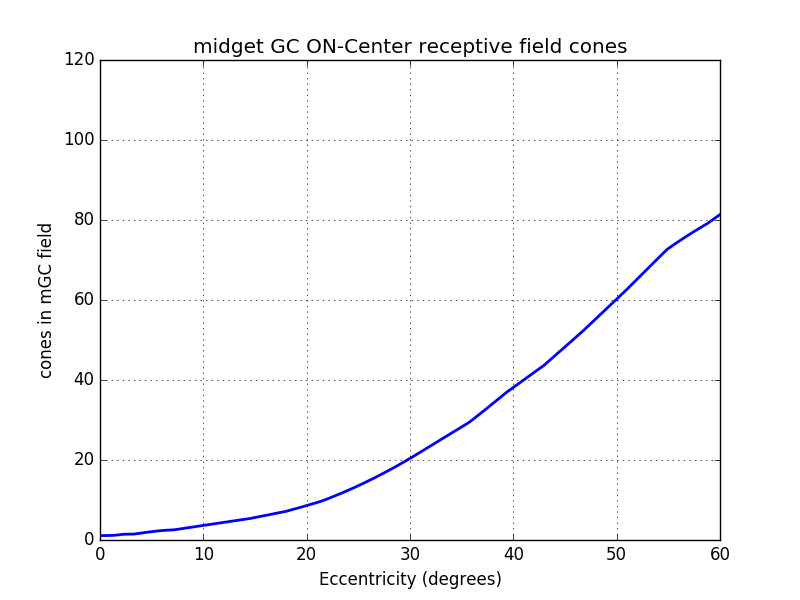

Since ON-center and OFF-center mGCs each cover the whole retina, we can assume that half of them are ON and half are OFF. The ON-center mGC density is then half of the total mGC density, and the same holds for the OFF-center mGC density. From these assumptions we can estimate the number of cones per midget ganglion cell (figure below): near the fovea each ON mGC receives exactly one cone, rising to 20 cones at 30°.

$midgetReceptiveCones(ecc) = 2\times \frac{densityCones(ecc)}{densityMidget(ecc)}$

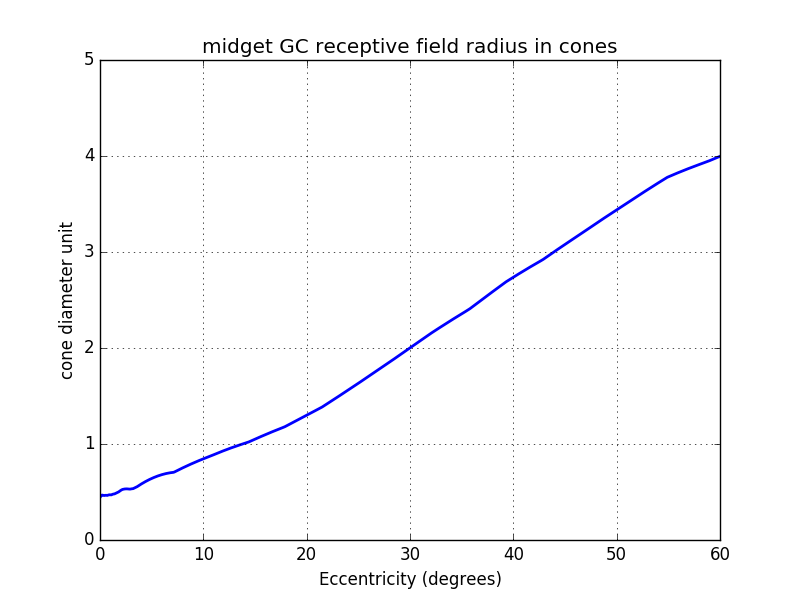

From the number of cones per mGC, we can compute the radius of each mGC receptive field, in cones (see graph below).

So far, we have considered only the total radius of the mGC receptive field. Some studies [TODO add ref] have shown a ratio of 1/3 between the central and the peripheral diameter.
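A sketch of the receptive field size computation. It assumes, as in the parasol derivation, that each cone occupies a disc of diameter 1, so a field containing $n$ cones has a radius of $0.5\sqrt{n}$ in cone units; the 1/3 ratio then gives the center radius. Function names are illustrative:

```python
import math


def midget_receptive_cones(cone_density, midget_density):
    """Cones per ON (or OFF) midget cell: 2 * cone density / total midget density."""
    return 2.0 * cone_density / midget_density


def radius_in_cones(n_cones):
    """Radius, in cone diameters, of a disc holding n_cones discs of diameter 1.

    pi * R^2 = n * pi * 0.5^2, so R = 0.5 * sqrt(n).
    """
    return 0.5 * math.sqrt(n_cones)


def center_radius(total_radius, ratio=1.0 / 3.0):
    """Radius of the receptive field center, using the 1/3 ratio."""
    return ratio * total_radius


# Fovea: 2 midget cells per cone, so each ON cell gets a single cone
n = midget_receptive_cones(14_804.0, 2 * 14_804.0)
r = radius_in_cones(n)
print(n, r, round(center_radius(r), 3))  # 1.0 0.5 0.167
```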

Midget Notes

Midget cells never have an S cone in their center [9], but they can have S cones in their surround.

OFF-center cells have a smaller dendritic field and a higher cell density (1.7 times higher [2]). I have not taken this into account yet, but it could be interesting: with a ratio of 1.0, the ON and OFF responses are exact opposites, so the OFF cells bring no additional information.

Midget cell simulation results

To visualize the midget ganglion cell response, I used a colormap (shown in the top left corner of the figure below). When the ganglion cell fires at its mean frequency, the color is blue; the further the response is from this value, the redder it becomes. With this scheme, we cannot distinguish high from low responses. However, since ON and OFF cells have identical receptive fields, their responses are always opposite. This representation is not perfect, but it is the best I have found so far.

Notice the central disk: it represents the area where each midget cell has a single central cone and no surround. Outside this area, midget cells respond to L/M opposition.

Here is a video of the mGC response.

Parasol cells

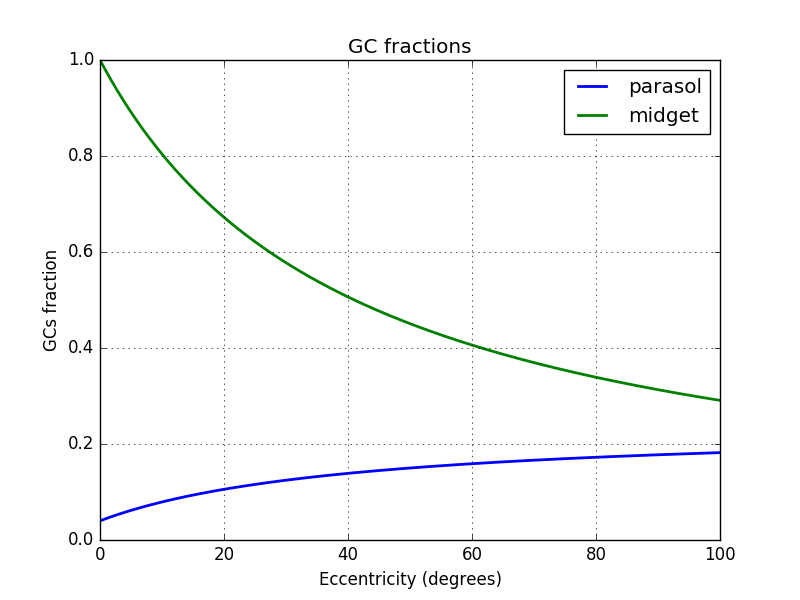

Like midget cells, ON- and OFF-center parasol cells cover the whole retina. However, unlike midget cells, parasol cells of the same type (ON or OFF) have overlapping receptive fields [13]. The distance between the centers of 2 neighboring cells of the same type is half of their receptive field diameter.

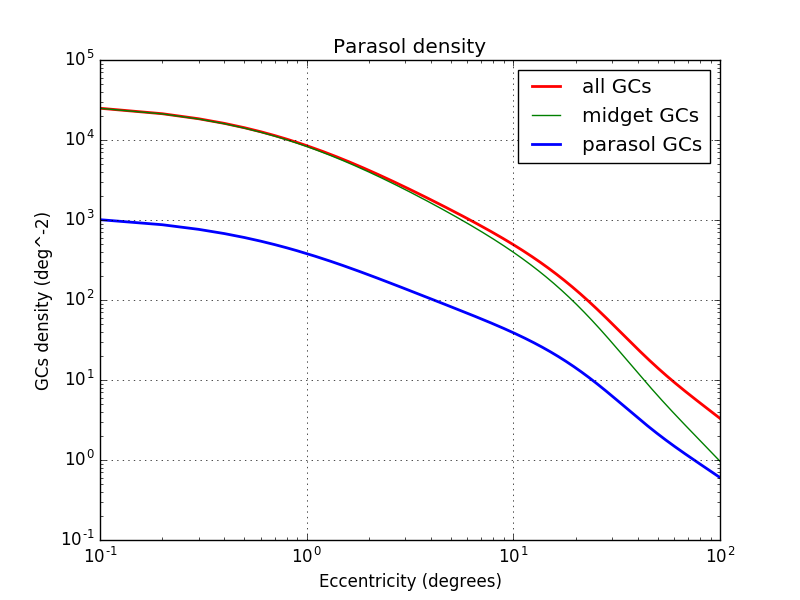

For parasol cells, I have not yet found a study showing their continuous distribution across eccentricity, so I use global statistics to approximate it.

| position | midget (% of all GCs) | parasol (% of all GCs) |
|---|---|---|
| fovea | 95% | 5% [13] |
| outside fovea (up to 50°) | 45% | 15% [13] |
| total (up to 50°) | 80% | 10% |

I assume that the parasol density is a constant fraction of all ganglion cells plus a constant fraction of the non-midget ganglion cells. I have no evidence that this is actually the case.

$density_{parasol}=C\times density_{all}+(density_{all}-density_{midget})\times K$

I find $C$ and $K$ using 2 positions:

- at 2.16° where $density_{parasol} = 5\%$ of $density_{all}$

- at 50° where $density_{parasol} = 15\%$ of $density_{all}$

I chose 2.16° because this is where midget cells represent 95% of all ganglion cells.
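Dividing the parasol model by $density_{all}$ gives $p = C + (1-m)K$, where $m$ and $p$ are the midget and parasol fractions of all ganglion cells. Two positions give two linear equations. The sketch below solves them, assuming a midget fraction of 45% at 50° (the "outside fovea" value of the table above), which reproduces $C=0.04$ and $K=0.2$:

```python
def solve_parasol_coefficients(m1, p1, m2, p2):
    """Solve p = C + (1 - m) * K from two (midget, parasol) fraction pairs."""
    k = (p2 - p1) / ((1.0 - m2) - (1.0 - m1))
    c = p1 - (1.0 - m1) * k
    return c, k


# 2.16 deg: 95% midget, 5% parasol; 50 deg: 45% midget, 15% parasol
C, K = solve_parasol_coefficients(0.95, 0.05, 0.45, 0.15)
print(round(C, 3), round(K, 3))  # 0.04 0.2
```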

This gives $C=0.04$ and $K=0.2$. The fraction of parasol cells obtained with these values is shown below:

Below is the density of parasol cells:

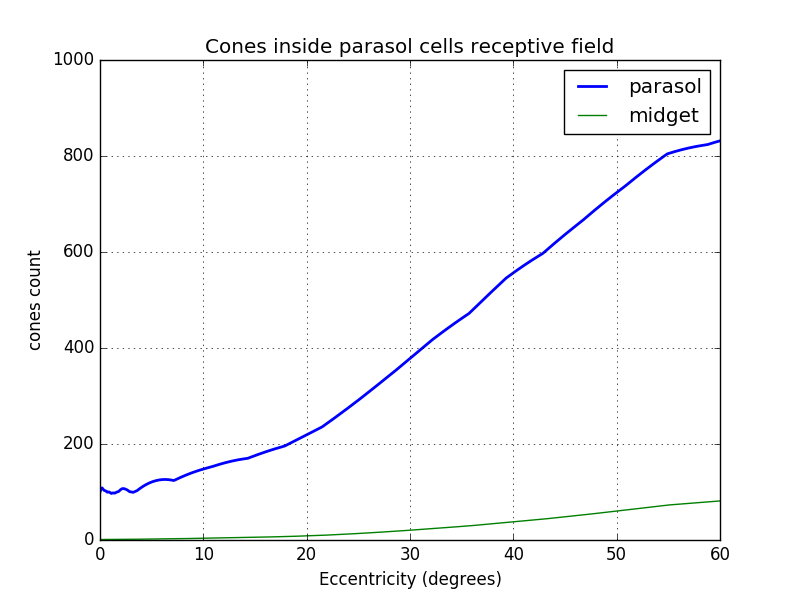

From this density, we can estimate the number of cones inside a parasol cell's receptive field, knowing that the distance between 2 neighboring cells of the same type is half of their receptive field diameter.

$parasolReceptiveCones(ecc) = 8\times \frac{densityCones(ecc)}{densityParasol(ecc)}$

Explanation of parasolReceptiveCones equation:

The density of same-type cells (ON or OFF) is $d_{p2}=\frac{densityParasol(ecc)}{2}$. Dividing the cone density by this density gives the number of cones per parasol cell without overlap. The radius of these non-overlapping fields is $R_{nonOverlapping}=\sqrt{\frac{\frac{densityCones}{d_{p2}}\times \pi\times 0.5^2}{\pi}}$. For the field to extend to the next cell's center, $R_{nonOverlapping}$ has to be multiplied by 2, which gives $R_{parasol}=2\times R_{nonOverlapping}$. From this radius, we get the area of one parasol cell's field, $A_p=\pi R_{parasol}^2$. To get the number of cones, we divide this area by the area of one cone, $S_c=\pi\times 0.5^2$. Combining these results gives:

$parasolReceptiveCones(ecc) = A_p/S_c = \frac{\pi R_{parasol}^2}{\pi 0.5^2}$

$parasolReceptiveCones(ecc) = (2\times 2\times R_{nonOverlapping})^2 = 2^4\times \frac{densityCones}{d_{p2}} \times 2^{-2}$

$parasolReceptiveCones(ecc) = 2^2 \times 2 \times \frac{densityCones(ecc)}{densityParasol(ecc)}$
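A small sketch that follows the derivation step by step and checks it against the closed form $8 \times densityCones/densityParasol$. The densities in the example are hypothetical:

```python
import math


def parasol_receptive_cones_steps(cone_density, parasol_density):
    """Follow the derivation step by step, with cones as discs of diameter 1."""
    d_p2 = parasol_density / 2.0              # density of one type (ON or OFF)
    cones_per_tile = cone_density / d_p2      # cones per non-overlapping field
    s_c = math.pi * 0.5 ** 2                  # area of one cone
    r_non_overlapping = math.sqrt(cones_per_tile * s_c / math.pi)
    r_parasol = 2.0 * r_non_overlapping       # field reaches the neighbor's center
    a_p = math.pi * r_parasol ** 2            # receptive field area
    return a_p / s_c


def parasol_receptive_cones(cone_density, parasol_density):
    """Closed form: 8 * densityCones / densityParasol."""
    return 8.0 * cone_density / parasol_density


# Hypothetical densities (deg^-2), for illustration only
print(parasol_receptive_cones_steps(400.0, 20.0), parasol_receptive_cones(400.0, 20.0))  # both about 160
```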

Parasol cells results

Below is the output of the parasol cells. I am not sure why there is still a bit of chromatic information, since parasol cells should not provide any because of their larger receptive fields. My explanation is that, with 70% M cones, some parasol cells may have mainly M cones in their center, so parasol cells do provide weak chromatic information. The other possible explanation is that the number of cones per parasol cell I found is too low…

Video in progress

Parasol Notes

Parasol cells also seem to be sensitive to spatio-temporal changes, which are not yet considered in the simulation.

Bistratified cells (ongoing)

S cones have 2 bistratified cells [9].

Notes

- ON cells have receptive fields with a 20% larger diameter than OFF cells [3]. I have not simulated this yet.

- Midget ganglion cell receptive fields are ellipsoidal [15]; see the images in [15]. [16] shows the surfaces covered by midget, parasol and bistratified ganglion cells.

- [16] also discusses the 17 ganglion cell types: midget, parasol, sparse, giant sparse, smooth, recursive, broad thorny, thorny, bistratified recursive, bistratified large, bistratified small…

- In the central visual field, 80% of the parvocellular LGN neurons showed center-surround segregation of L and M cone input [4].

- In the peripheral retina, between 20 and 50 degrees of eccentricity, Martin et al. (2001) found that 80% of tonically responding, presumably midget, ganglion cells were opponent.

- "Tonically responding" means a constant output under constant illumination, so these should be midget cells.

- The arrangement of ganglion cells on the retinal surface is not spatially random. Each cell type is distributed in an ordered mosaic. Within each mosaic, cells tend to be spaced apart so as not to occupy a neighboring cell’s territory (72). Characteristically, the “nearest neighbor distances” are greater than would be expected of a random distribution. Cells of different types may be closely spaced, however. The mosaics of different cell types are said to be independent. Receptive and dendritic fields of cells within a mosaic partially overlap [5].

- ON- and OFF-center cells appear mutually indifferent to each other’s presence and may approach closely without an exclusionary zone. These cell types are said to occupy independent lattices [5].

- Cross-correlations are generated from simultaneous recording of two ganglion cell spike trains. Impulses from the first cell set the zero time around which impulse firing rate histograms for the second cell are generated. The histogram is accumulated for every impulse fired by the first cell. When two neighboring ON-center cells are so paired, a large central peak in firing rate for the second ON cell is seen (Fig. 41, red, left). A similar pattern is seen when two OFF cells are recorded (Fig. 41, blue right). In both cases, increased firing rate represents an increased likelihood that if one cell fires, the other will also. Impulse generation in the two cells is not independent. The cells share common excitatory inputs sources and are likely to be simultaneously excited [5].

- Ganglion cells develop a high frequency (~100 Hz) oscillatory firing pattern when presented with localized bright stimuli [5].

- Number of ganglion cells: 4 million cones -> 1.6 million optic nerve fibers [6].

- Midget cell receptive radius: Between 2 and 6 mm eccentricity, midget cells showed a steep, 10-fold increase in dendritic field size, followed by a more shallow, three- to fourfold increase in the retinal periphery, attaining a maximum diameter of ~225 µm [4].

| position | diameter | field of view (°) | cone density |
|---|---|---|---|
| fovea FAZ | 0.5 mm | 1.5° | 150,000 cones/mm² |
| fovea total | 1.2 mm | 5° | |
| macula | ~3 mm | | |

| position | GC/cone ratio |
|---|---|
| 2.2° | 2:1 |

| diameter | field of view (°) |
|---|---|
| 0.5 mm | 1.5° |
| 1.2 mm | 5° |
| 1.7 mm | 6° |
| 4.5 mm | 18° |

| eccentricity (mm) | midget dendritic field size (µm) | midget dendritic field increase factor |
|---|---|---|
| 0 to 2 | 5-10 | x 1 |
| 2 to 6 | 50-80 | x 10 |
| more than 6 | up to 225 | x 30-40 |

Cone diameter varies from 0.5 to 4.0 µm

Cone pedicles are large, conical, flat end-feet (8-10 µm diameter) [8]

S-cones remain isolated up to the ganglion cell level too, due to connections with a specific ‘S-cone bipolar cell’ [8]

Biblio

[1] The brain from top to bottom

[8] webvision.med.utah.edu, Part II: Anatomy and Physiology of the Retina

[13] D. W. Marshak, in Encyclopedia of Neuroscience, 2009

[17] Popović, Zoran (2003). Neural limits of visual resolution.

Annex

Conversion of eccentricities in millimeters to degrees

I used formula $(A5)$ from [10]:

$r_{mm}(r_{deg}) = 0.268r_{deg}+0.0003427r_{deg}^2-8.3309\times10^{-6}r_{deg}^3$ $(A5)$

with $r_{mm}$ being the eccentricity in mm and $r_{deg}$ the equivalent eccentricity in degrees
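A direct transcription of (A5) for converting eccentricities from degrees to millimeters (the function name is illustrative):

```python
def ecc_deg_to_mm(r_deg):
    """(A5): retinal eccentricity in mm from eccentricity in degrees."""
    return 0.268 * r_deg + 0.0003427 * r_deg ** 2 - 8.3309e-6 * r_deg ** 3


print(round(ecc_deg_to_mm(10.0), 4))  # 2.7059
```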